In the primordial pattern literature, Christopher Alexander recommends a granularity gradient for the size of windows across the stories of a house (see the pattern “Natural Doors and Windows” in [1]).

...the Product Owner has laid out a release vision as a Product Backlog, taking the market value of each item into consideration, as well as cost estimates from the Development Team.

✥ ✥ ✥

Small Items are easiest to estimate, but breaking down work items is a lot of work in itself. Estimates for small product increments and tasks have less error than those for larger ones, for three reasons:

- The magnitude of the work (and therefore of the possible error) is smaller than for a larger increment or task.

- The team can better understand smaller deliverables and tasks than larger ones.

- The percentage error on smaller deliverables and tasks is lower than on large ones because there is less guesswork involved (and the maximum size of any error is implicitly limited).

If the team has not broken down large Product Backlog Items (PBIs) into small ones, the estimate for each one will be coarse and imprecise. It is easy to believe that any estimates are better than no estimates—and that may be true. It is also easy to avoid the discipline of going the extra mile to estimate at a finer level. You can get into analysis paralysis if you spend too much time on estimation. Furthermore, those who want to manipulate schedules can too easily use detailed estimates to micromanage developers.

It is important that the Development Team reduce the estimation error as much as is reasonable so it is confident in meeting its forecast to the Product Owner, and so the Product Owner can in turn meet his or her commitment to the market. It takes work to break down large requirements into small ones. That work takes time, time that is waste in the Value Stream. Furthermore, a Product Backlog with many items is hard to manage.

For near-term items, the effort the team spends estimating work is worth the cost: the market will remember a schedule slip longer than it will remember how much it paid for an item.

However, emergent requirements, arising from changes in the market, in technology, or in team staffing, can invalidate even the best estimates. The more time passes, the more likely such changes become, so the more distant a PBI lies in time, the lower the confidence in its estimate. On the other hand, one can use lead time to one’s advantage: a long lead time means more time to reduce the uncertainty that comes with any new product increment idea. Boehm ([2], p. 311) has shown empirically that estimates may be too large or too small by as much as a factor of four until the team is able to detail the requirements, and such detailing takes place over time. But because of changes in the market and other factors in the environment, breaking down long-term requirements is not enough; in general, nothing can make estimates of long-term requirements certain. Nor is the passage of time alone enough ([3], p. 38). The team must continuously act on new insights as it gains them: insights into the state of the market, into its own changing level of experience, and into the ongoing changes in the product itself. This is one reason that long-term waterfall development cycles miss the mark. Yet even in the short term, the best of estimates fall victim to emergent requirements, which may double the number of tasks or the overall effort required to meet a market need.
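To make the factor-of-four figure concrete, the following Python sketch turns a single point estimate into the low and high bounds it implies. The function name `estimate_range` and the tighter factor of 1.25 in the second call are hypothetical illustrations, not values taken from Boehm; the sketch only assumes the error is symmetric on a multiplicative scale.

```python
def estimate_range(point_estimate: float, factor: float = 4.0) -> tuple[float, float]:
    """Return the (low, high) bounds implied by a multiplicative error factor.

    factor=4.0 mirrors the "as much as a factor of four" spread cited from
    Boehm for requirements not yet detailed. Illustrative only, not calibrated.
    """
    if point_estimate < 0 or factor < 1:
        raise ValueError("point_estimate must be >= 0 and factor must be >= 1")
    return point_estimate / factor, point_estimate * factor


# A 20-point item whose requirements are still vague:
print(estimate_range(20))        # (5.0, 80.0)
# The same item after detailing, with a hypothetical factor of 1.25:
print(estimate_range(20, 1.25))  # (16.0, 25.0)
```

The sixteenfold spread between the bounds in the first call is what makes distant, undetailed PBIs so hard to plan against.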

Processing work in large batches increases the variance in the process. To reduce variance, reduce batch size ([4], p. 112).
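One way to see why smaller batches reduce variance is a simple statistical model. The sketch below assumes, hypothetically, that a fixed amount of Sprint work is split into equal items whose actual efforts deviate independently from their estimates, each with a standard deviation proportional to the item’s size (a constant coefficient of variation `cv`). Under those assumptions the standard deviation of the Sprint total is cv × total / √n, so it shrinks with the square root of the number of items. The function name and the numbers are illustrative only.

```python
import math


def sprint_total_stddev(total_work: float, n_items: int, cv: float) -> float:
    """Standard deviation of the Sprint's total actual effort.

    Hypothetical model: total_work is split into n_items equal items whose
    errors are independent, each with standard deviation cv * item_size.
    """
    if n_items < 1:
        raise ValueError("n_items must be >= 1")
    item_size = total_work / n_items
    item_variance = (cv * item_size) ** 2
    # Variances of independent errors add; take the square root at the end.
    return math.sqrt(n_items * item_variance)


# 100 points of work with a 50 percent per-item coefficient of variation:
for n in (1, 4, 10, 20):
    print(n, round(sprint_total_stddev(100.0, n, cv=0.5), 2))
# 1 50.0
# 4 25.0
# 10 15.81
# 20 11.18
```

Real estimation errors are rarely fully independent (a shared wrong assumption can inflate many items at once), so the improvement in practice is smaller than this model suggests. The model also ignores the earlier point that smaller items tend to have a lower percentage error, which would help further.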

Relatively worthless components of a development increment can easily hide in a larger PBI, but they become visible, and can be judged on their own merits, once the larger PBI is broken down.

Therefore:

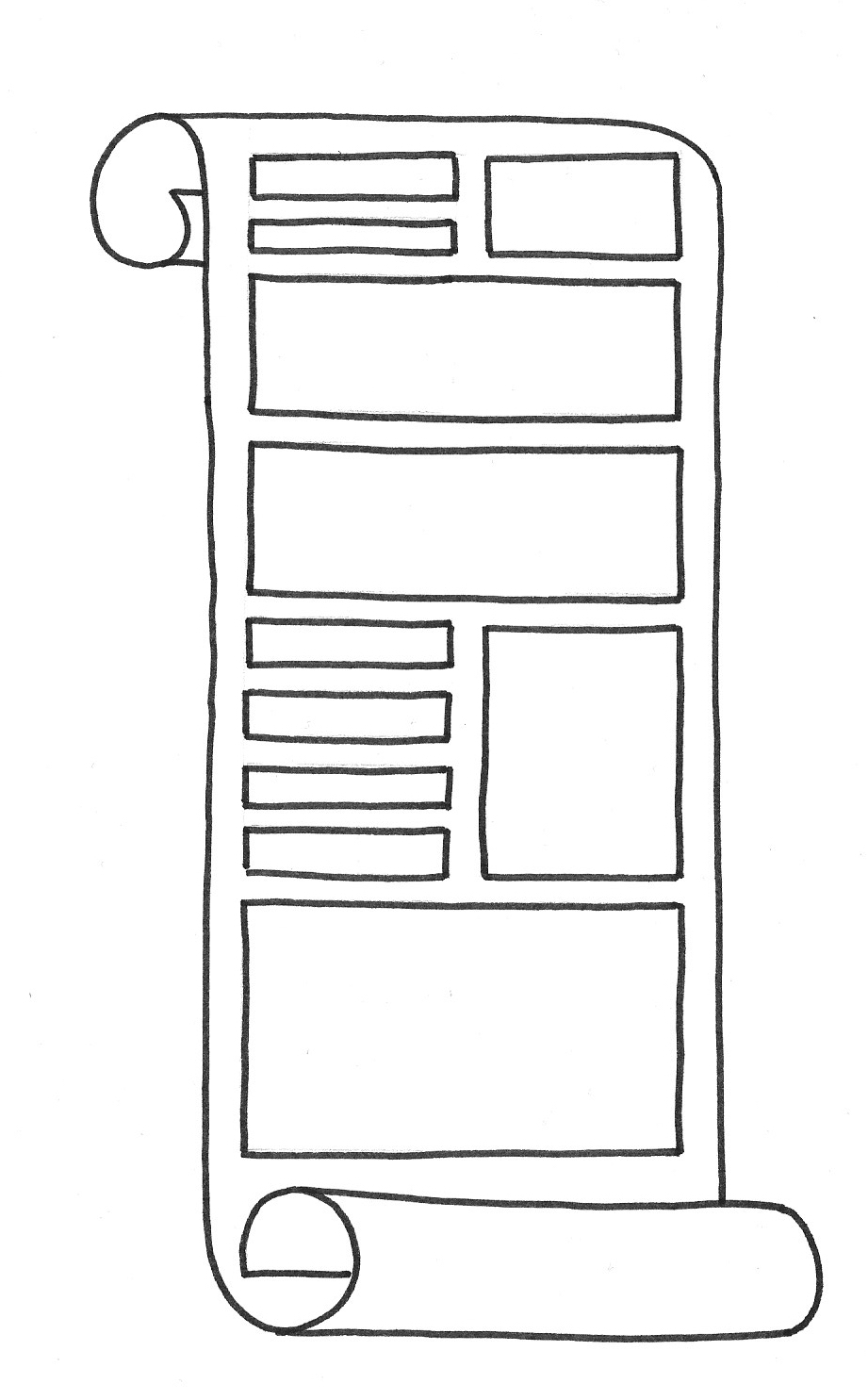

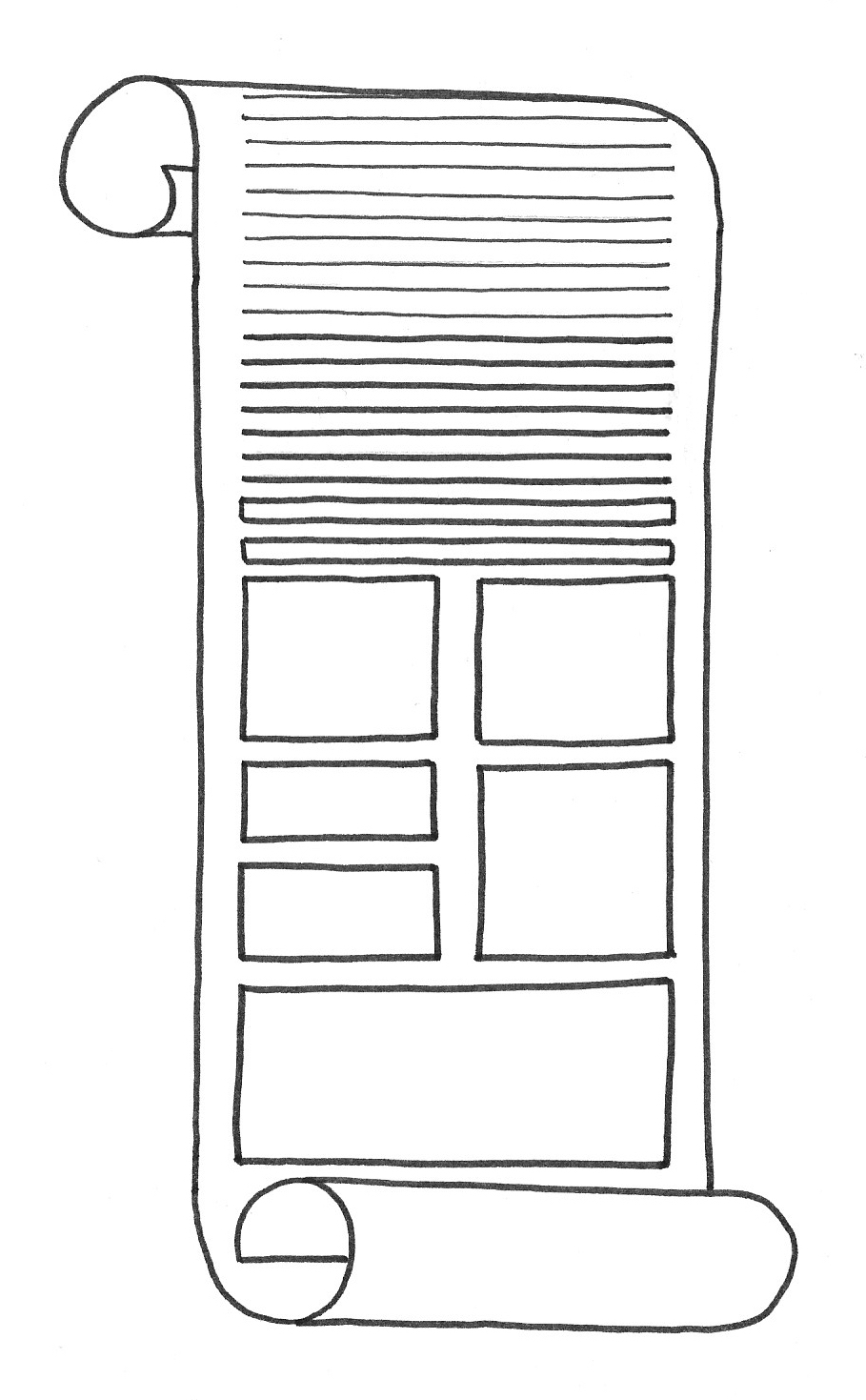

Break down the earliest PBIs into Small Items, each requiring half a Sprint or less of work for one individual (about 10 percent of the total Sprint work). The team should break down later PBIs so that their size is proportional to their depth in the Product Backlog. A PBI that lies more than four or five Sprints in the future may be arbitrarily large and may be little more than a whim of what the feature should do.
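The gradient can be expressed as a size cap that depends on how many Sprints ahead a PBI lies. In this Python sketch, the function `max_pbi_fraction` and its defaults are hypothetical: it applies the 10 percent cap to the next three Sprints, lets the cap grow in proportion to depth out to a five-Sprint horizon, and places no cap beyond that. A team would tune these numbers to its own context.

```python
import math


def max_pbi_fraction(sprints_ahead: int, near_sprints: int = 3,
                     horizon: int = 5, base: float = 0.10) -> float:
    """Largest acceptable PBI size, as a fraction of one Sprint's total work.

    sprints_ahead: 1 for the next Sprint, 2 for the one after, and so on.
    The defaults (3, 5, 0.10) are illustrative readings of the pattern text,
    not fixed rules.
    """
    if sprints_ahead < 1:
        raise ValueError("sprints_ahead must be >= 1")
    if sprints_ahead <= near_sprints:
        return base                                 # near term: Small Items only
    if sprints_ahead <= horizon:
        return base * sprints_ahead / near_sprints  # grows in proportion to depth
    return math.inf                                 # distant: may be arbitrarily large


for depth in range(1, 7):
    cap = max_pbi_fraction(depth)
    label = "no cap" if math.isinf(cap) else f"{cap:.0%}"
    print(depth, label)
# prints 10%, 10%, 10%, 13%, 17%, no cap for depths 1 to 6
```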

Said another way, no PBI within the top two or three Sprints should take more than 10 percent of the total PBI effort for that Sprint, and keeping each below 5 percent of the total effort is even better. Then, even if emergent requirements cause an item’s estimate to double during the Sprint, the team can still complete and deliver many PBIs.
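The per-Sprint check and the doubling argument can be sketched together. In this hypothetical Python example, `check_sprint` labels each PBI by its share of the Sprint’s total estimated effort (over 10 percent: split it; over 5 and up to 10 percent: acceptable; 5 percent or less: preferred), and `overrun_if_largest_doubles` reports how far the Sprint total would grow if the single largest item took twice its estimate. The function names, item names, and effort values are invented for illustration.

```python
def check_sprint(items: dict[str, float],
                 hard_limit: float = 0.10,
                 soft_limit: float = 0.05) -> dict[str, str]:
    """Label each PBI by its share of the Sprint's total estimated effort."""
    total = sum(items.values())
    verdicts = {}
    for name, effort in items.items():
        share = effort / total
        if share > hard_limit:
            verdicts[name] = "split"       # too large for a near-term Sprint
        elif share > soft_limit:
            verdicts[name] = "acceptable"  # over 5, up to 10 percent
        else:
            verdicts[name] = "preferred"   # 5 percent or less
    return verdicts


def overrun_if_largest_doubles(items: dict[str, float]) -> float:
    """Fractional growth of the Sprint total if the largest item takes twice its estimate."""
    return max(items.values()) / sum(items.values())


# Hypothetical Sprint: one large item, one medium item, 17 small ones (50 points total).
items = {"checkout-redesign": 12.0, "audit-log": 4.0}
items.update({f"small-{i}": 2.0 for i in range(1, 18)})

flagged = [name for name, v in check_sprint(items).items() if v == "split"]
print(flagged)                            # ['checkout-redesign']
print(overrun_if_largest_doubles(items))  # 0.24

# After splitting the large item into three 4-point items:
split_items = {k: v for k, v in items.items() if k != "checkout-redesign"}
split_items.update({f"checkout-part-{i}": 4.0 for i in range(1, 4)})
print(overrun_if_largest_doubles(split_items))  # 0.08
```

With the large item split, a doubled estimate on any one PBI costs the Sprint at most 8 percent of its total effort instead of 24 percent, leaving room to finish most of the other items.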

✥ ✥ ✥

Breaking down the Product Backlog this way lays a foundation for a more fully Refined Product Backlog (working toward a Definition of Ready). Small PBIs and small Sprint Backlog Items both reduce risk by reducing the leakage cost if the team fails to carry any single unit of delivery or work to completion. See Small Items.

With a gradient of size, the Product Backlog effectively offers a top-down view of the items that are closer to the bottom and a bottom-up view of those closer to the top. This is in line with the recommendation of Trendowicz and Jeffery ([5], p. 143) to “[p]refer top-down estimation in the early (conceptual) phases of a project and switch to bottom-up estimation where specific development tasks and assignments are known.”

Estimate all the PBIs, including the ones for the distant future. Refresh those estimates less frequently than those whose release time is more imminent. Accept higher estimation uncertainty the further that work lies in the future. Do not waste the effort of breaking distant PBIs down, since unplanned changes are likely to overshadow the increased precision. But remember that perhaps the main purpose of estimation and backlog refinement is to get the team to start thinking about the forthcoming feature; see Refined Product Backlog.

Avoid estimating at a level of granularity that would lead to micromanagement. Estimation Points are the preferred method, but with simple math even those can be converted to hours for any given Sprint. Some Scrum folklore recommends estimating to a granularity of two hours; in our experience, such fine estimates become self-fulfilling prophecies in complex emergent projects, and estimating to a granularity of about a day is enough.
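The “simple math” for converting Estimation Points to hours can be sketched as a ratio between a Sprint’s available hours and the points the team forecasts for it, with the result rounded up to whole days to match the day-level granularity recommended here. The function names, the 300-hour capacity, the 40-point forecast, and the six focus hours per day are all hypothetical values chosen for illustration.

```python
import math


def points_to_hours(points: float, sprint_points: float, sprint_hours: float) -> float:
    """Convert Estimation Points to hours using this Sprint's own ratio.

    sprint_points: points the team forecasts for the Sprint (hypothetical input).
    sprint_hours: working hours the team has available in the Sprint (hypothetical input).
    """
    if sprint_points <= 0:
        raise ValueError("sprint_points must be positive")
    return points * sprint_hours / sprint_points


def round_to_days(hours: float, hours_per_day: float = 6.0) -> int:
    """Round an hour figure up to whole days (minimum one day)."""
    return max(1, math.ceil(hours / hours_per_day))


# Five people, ten days, six focus hours a day: 300 hours for a 40-point forecast.
hours = points_to_hours(3, sprint_points=40, sprint_hours=300)
print(hours)                 # 22.5
print(round_to_days(hours))  # 4
```

Because the ratio depends on each Sprint’s capacity and forecast, the conversion holds only for that Sprint.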

This is a broadly accepted practice.

[1] Christopher Alexander. Natural Doors and Windows. In A Pattern Language. Oxford, UK: Oxford University Press, 1977, pattern 221.

[2] Barry Boehm. Software Engineering Economics. New York: Prentice-Hall, 1981, p. 311.

[3] Steve McConnell. Software Estimation: Demystifying the Black Art. Redmond, WA: Microsoft Press, 2006, p. 38.

[4] Don Reinertsen. The Principles of Product Development Flow: Second Generation Lean Product Development. Redondo Beach, CA: Celeritas Publishing, March 29, 2012, p. 112.

[5] Adam Trendowicz and Ross Jeffery. Software Project Effort Estimation. Basel, Switzerland: Springer International Publishing, 2014, p. 143.

Picture credits: The Scrum Patterns Group (Mark den Hollander).